Hello, I'm Takanori Machida from our AI Trust Research Centre. Today, I would like to introduce an exciting new AI core engine, the Adversarial Example Attack Detector, which is now available from Fujitsu Kozuchi.

This post is the fifth in our five-week blog series. You can read the previous post, "Introducing Camera Angle Change Detection", here!

The Adversarial Example Attack Detector is a technology that detects, in real time, attacks that cause image recognition AI to misidentify objects. It is one of the AI core engines in Fujitsu Kozuchi, which enables fast testing of cutting-edge AI technologies developed by Fujitsu.

Reports have revealed that it is possible to deliberately make AI misidentify objects by attaching special patterns, such as patches, to them. This type of attack is known as an Adversarial Example Attack or Adversarial Patch Attack. Existing defenses against adversarial example attacks can recognize that an attack has occurred, but they do not report which object was deceived or how. In addition, the AI must be retrained every time a new type of patch attack appears, which makes these defenses difficult to deploy quickly. To overcome these issues, we have developed the Adversarial Example Attack Detector.

Benefits of the Adversarial Example Attack Detector and how to use it

The principal value of the Adversarial Example Attack Detector is that it detects, in real time, when an attack causes image recognition AI to misidentify an object, and it reports which object was misidentified and exactly how.

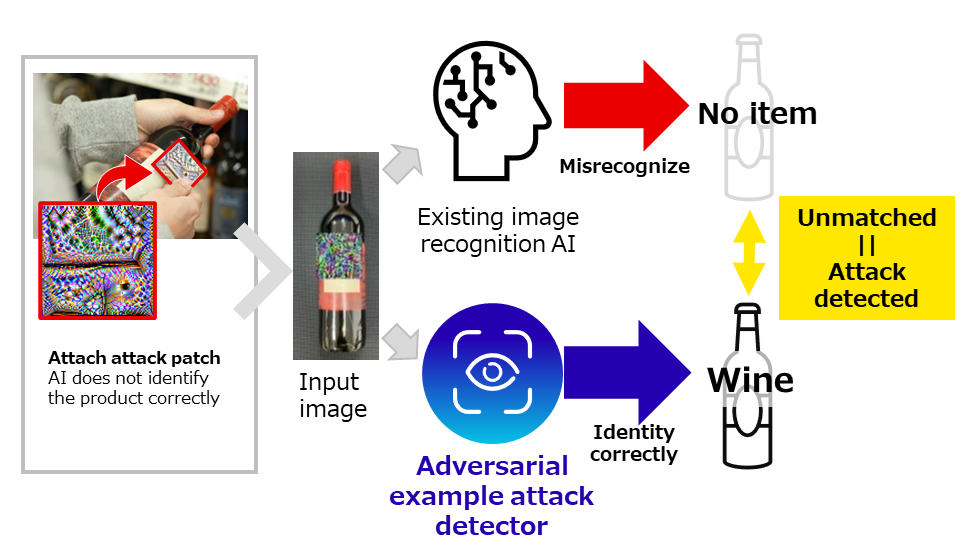

Let me explain with an example where an attack patch is attached to a product in a store. When a wine bottle has an attack patch attached, the existing image recognition AI cannot identify the product correctly. The AI may misidentify the wine as a different product, or it may fail to recognize that the item exists at all, producing no identification. Suppose a camera monitors self-checkout registers and image recognition AI checks whether each product has been scanned. If the AI misidentifies the patched wine as "no recognition result (no item)", an unscanned bottle of wine will not be flagged as fraud. The fraud is overlooked, and the store loses sales as a result.

The Adversarial Example Attack Detector can correctly identify objects, even if they have an attack patch attached. If its recognition result does not match the existing image recognition AI's result, it detects an attack that is preventing the existing AI from recognizing the object correctly. In the example shown in the figure, the existing image recognition AI's result is "no item", and the Adversarial Example Attack Detector's result is "wine". Therefore, we know that an attack occurred that caused the wine to be misidentified as "no item". Because fraud like this is detected in real time, the necessary action can be taken early.
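The comparison step described above can be illustrated with a minimal Python sketch. It assumes both systems return a single string label per object, with "no item" meaning nothing was recognized; the names `check_for_attack`, `AttackReport`, and `NO_ITEM` are hypothetical and are not part of any Fujitsu Kozuchi API.

```python
from dataclasses import dataclass
from typing import Optional

NO_ITEM = "no item"  # hypothetical label for "nothing recognized"


@dataclass
class AttackReport:
    """Hypothetical report: whether an attack occurred, what the object really is, and what it was mistaken for."""
    attacked: bool
    true_label: Optional[str] = None   # label from the attack-resistant detector
    deceived_as: Optional[str] = None  # label from the existing recognition AI


def check_for_attack(existing_result: str, detector_result: str) -> AttackReport:
    """Flag an attack whenever the existing AI and the detector disagree."""
    if existing_result == detector_result:
        return AttackReport(attacked=False)
    # Disagreement: the detector's label is treated as the true object, and
    # the existing AI's label shows how it was deceived.
    return AttackReport(attacked=True, true_label=detector_result, deceived_as=existing_result)


# The wine example from the figure: existing AI says "no item", detector says "wine".
report = check_for_attack(existing_result=NO_ITEM, detector_result="wine")
if report.attacked:
    print(f"Attack detected: '{report.true_label}' was misidentified as '{report.deceived_as}'")
```

Because the check only compares outputs, a sketch like this sits outside the existing recognition system rather than inside it.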

This technology can also be used in other ways. For example, it can detect unauthorized car entry and evasion of car tracking carried out by attaching patches to license plates, and it can detect suspicious individuals who disguise their presence by wearing clothes printed with patches.

In addition, our Adversarial Example Attack Detector is easy to apply. It can be used externally, so there is no need to modify either the existing image recognition AI or its AI model. Even when a new type of patch attack appears, there is no need to retrain the AI, which reduces the operational effort required.

Features of Adversarial Example Attack Detector technology

The Adversarial Example Attack Detector uses Fujitsu's unique explainable ensemble learning, which combines multiple techniques for reducing the impact of patches, to identify the target object correctly and quickly. We also provide a customization tool that tailors attack detection to the objects the image recognition AI has learned, making it easy to apply in the field. This technology is an outcome of our joint research program with Ben-Gurion University of the Negev.
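This post does not describe how Fujitsu's explainable ensemble learning works internally, so the Python sketch below only illustrates the general ensemble idea with a plain majority vote: several recognizers label the same image, and a patch that fools one member can be outvoted by the others. Returning every member's vote alongside the result gives a simple, inspectable record of how the decision was reached. The function `ensemble_predict` and the three member recognizers are hypothetical stand-ins, not Fujitsu's techniques.

```python
from collections import Counter
from typing import Callable, Dict, Tuple

# A recognizer takes an image (any object here) and returns a label string.
Recognizer = Callable[[object], str]


def ensemble_predict(image: object, members: Dict[str, Recognizer]) -> Tuple[str, Dict[str, str]]:
    """Majority vote over several recognizers.

    Returns the winning label plus each member's individual vote, so the
    decision can be inspected afterwards. On a tie, the label encountered
    first wins (Counter.most_common preserves insertion order for ties).
    """
    votes = {name: recognize(image) for name, recognize in members.items()}
    label, _count = Counter(votes.values()).most_common(1)[0]
    return label, votes


# Hypothetical members: each stands in for a recognizer that would apply a
# different patch-mitigation step (e.g. masking or smoothing) before classifying.
members = {
    "plain": lambda img: "no item",  # fooled by the patch
    "masked": lambda img: "wine",
    "smoothed": lambda img: "wine",
}

label, votes = ensemble_predict("shelf_image.jpg", members)
print(label)  # wine
print(votes)
```

A real system would weigh members more carefully than a simple vote, but the sketch shows why combining several patch-mitigation techniques tends to be more robust than relying on any single one.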

November 16, 2021 Press Release

Fujitsu and Ben-Gurion University Embark on Joint Research at New Center in Israel for Precise and Secure AI

Interested in testing Fujitsu Kozuchi?

The Adversarial Example Attack Detector can be applied to various image recognition AIs. It will be of value to anyone who wants to prepare in advance for potential future threats. Please contact us to run a verification on your own AI model, covering everything from attacks to countermeasures.

For a demonstration or to test our Adversarial Example Attack Detector, please contact us here: